“Awaiting Action”, Automatic Credit Granting, and the “Link Clicked” Date

Published April 17, 2023

Awaiting Action or Not?

When uncredited timeslots appear from studies with automatic credit granting

Generally speaking, automatic credit granting makes recruiting and compensating participants almost too easy. Partly this is due to the nature of online studies:

- Online studies, especially surveys, can be conducted automatically (without a researcher present)

- Online studies can be conducted at any time and from (almost) anywhere.

There are, certainly, downsides to eschewing the benefits of the lab, but our goal here isn’t to weigh the pros and cons of online vs. in-person settings. Rather, it’s to field a common question researchers running online studies ask: “Why are participants in my study showing up under ‘Awaiting Action’ when I set the study up to automatically grant credit?”

This question comes up often enough that we thought a more permanent, accessible answer would be a useful resource. Hence this post, where we’ll be addressing not only the question and its answer, but also how to most easily and optimally handle participants showing up unexpectedly in the “Awaiting Action” list. With this goal in mind, let’s turn back to the question itself: Why might researchers find participants in their online study, one that is supposed to be set up for automatic credit granting, winding up in the “Awaiting Action” list on the Uncredited Timeslots page?

We should point out that this kind of situation is one reason we have a combined “Past and Online” tab for viewing uncredited timeslots. Also, it’s worth mentioning that the first step is to make sure that the study is properly configured for automatic credit granting. Most of the time, the problem is an error configuring the study for integration with Sona, and fixing it will do the trick.

That said, it is indeed possible for an online study to be correctly configured for integration and yet for researchers to find participants listed among those “Awaiting Action” with uncredited timeslots. This is no doubt confusing to many researchers, who were looking forward to all the perks of Sona’s third-party online integration capabilities. Then, contrary to their expectations, they find participants on the Uncredited Timeslots page that they thought (with good reason) automatic credit granting would take care of.

As frustrating as this may be, the good news is that this is often simply due to the way automatic credit granting works. The better news is that often those “Awaiting Action” in these cases may actually not require any action on the researcher’s part at all. This may seem a bit counterintuitive. It really isn’t, provided one has the proper context. This means we have to delve into timeslots a bit.

A lot of the confusion stems from thinking about timeslots and participation for online studies from the perspective of in-person studies. But there are some important distinctions that, if not taken into account, can lead precisely to the central question this post answers. It begins with the distinction in timeslots. For in-person studies, timeslots have a clear beginning and end time. Participants enter a room or lab or some other physical location when the study begins, and leave when it ends. Once the timeslot for an in-person study is over and the participants have left, researchers log into Sona and mark participation for everyone who showed (and, if necessary, mark those who didn’t as no-shows).

Online studies do not work this way. It’s not just that the “location” is e.g., a website rather than, say, a neuroimaging lab. It’s timeslots. Typically, online studies only have one. Also, this “timeslot” is more like the entire sign-up period: It starts when the online study is first made available to participants, and it doesn’t become truly “past” until after the selected end date and time of the online study.

Taking a Closer Look at Tabs & Timeslots

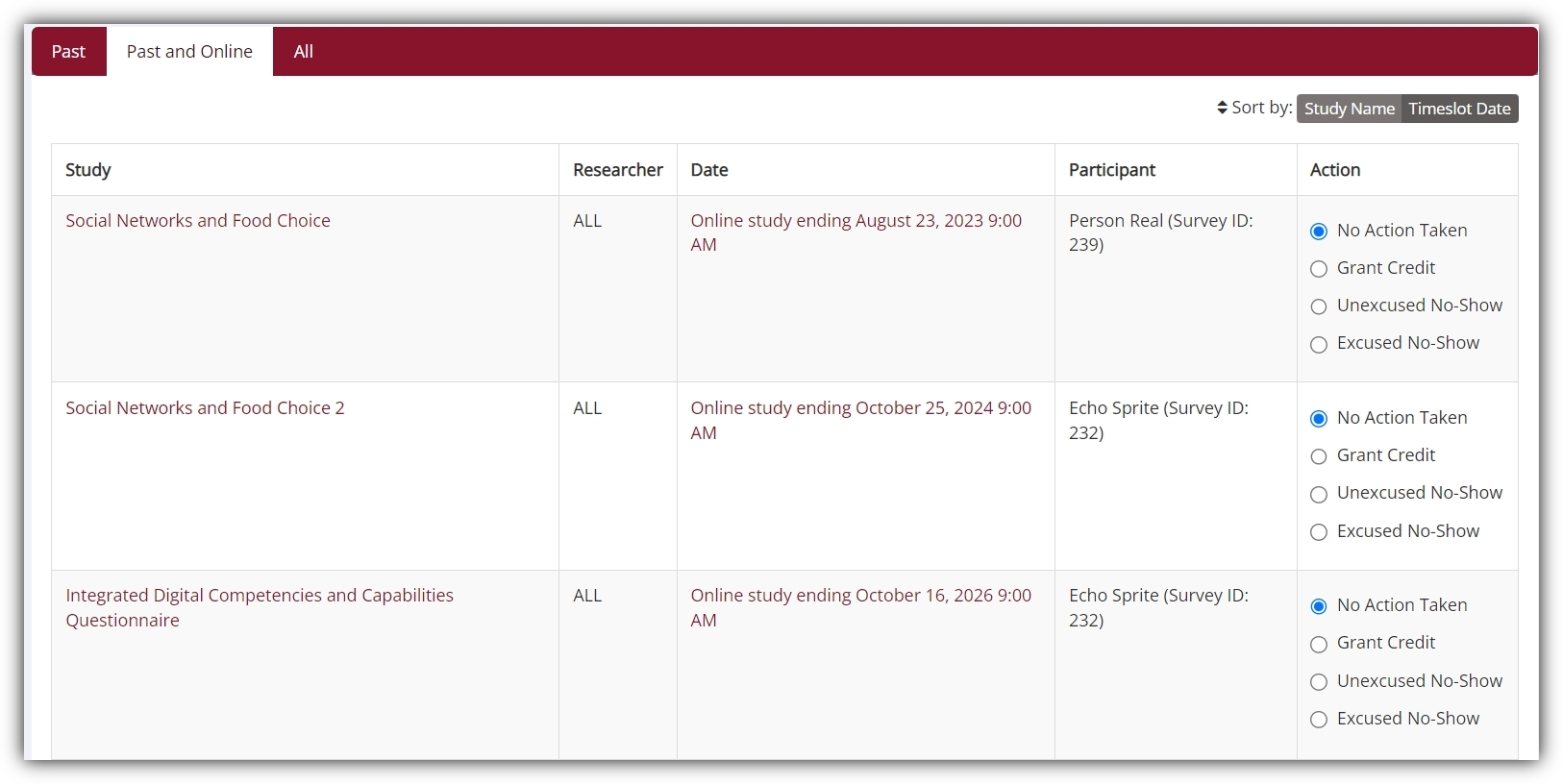

What, then, does this have to do with the Uncredited Timeslots page and why it can appear that automatic credit granting isn’t working? We’ll answer this rhetorical question with a hypothetical one: What happens if a participant signs up for an online study (one properly configured for integration), but doesn’t start the online study immediately? The answer is that the participant will appear on the table in the “Past and Online” tab view on the Uncredited Timeslots page:

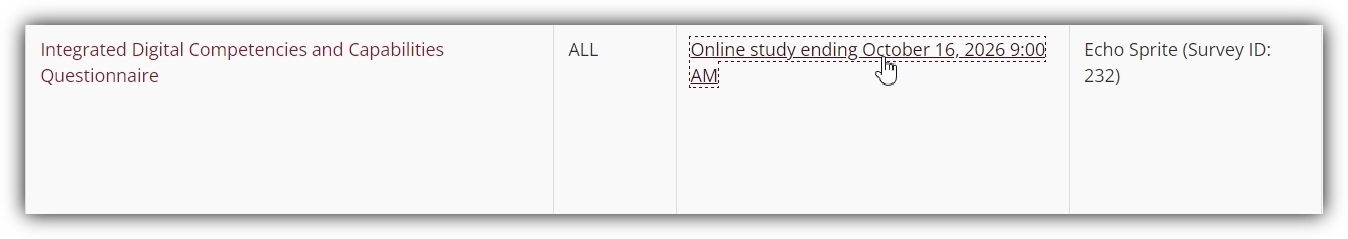

But how can we know that the reason these participants are showing up on the Uncredited Timeslots page is because they haven’t completed (perhaps haven’t even started) the online survey? The answer is only a click and a half away, starting with the clickable timeslot link:

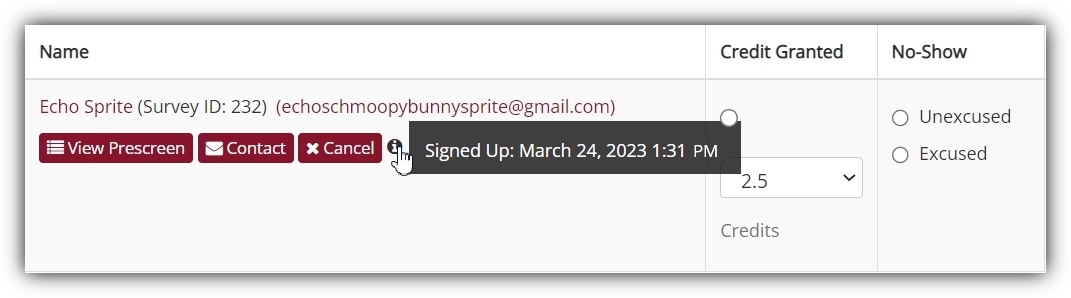

We could have clicked on the study itself, which would bring us to the study information page, but clicking on the timeslot leads us directly to the place where we’ll find our answer. Let’s remind ourselves of what we’re asking here: This study is set up to grant credit automatically, so we want to know why this participant is showing up on the Uncredited Timeslots page and, in particular, if it is because the participant hasn’t finished or hasn’t started the survey. We don’t even need to click on anything more to find out, thanks to Sona’s Tooltip feature, which is why this only counts as half a click (for a total of 1.5 from the Uncredited Timeslots page):

As you can see, the participant has signed up for the study, but there is no “link clicked” date and time (let alone a “Participation Marked” time). This means the participant signed up for the study but did not click on the link to begin it. In short, nothing needs to be done. It’s almost as if the “timeslot” for this participant is in the future, and will be taken care of automatically once the participant completes the survey they’ve yet to start.

This raises the question, though, of what would happen if the participant had clicked on the link, but then wasn’t able to continue.

Putting the “Tool” in Tooltip: Making Use of the “Link Clicked” Information

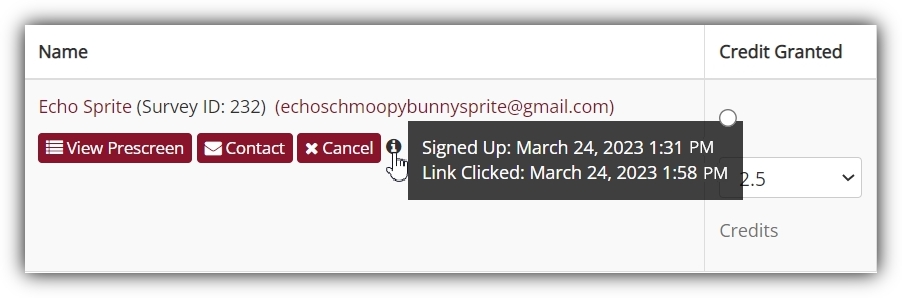

We just had the bad luck to check on the uncredited timeslots during these few minutes. But, in this highly unrealistic scenario, we’re going to further imagine that, given his single-minded devotion to tasks at hand, we may have just caught him in the few minutes between completing his sign-up and starting the survey. Unrealistic, yes. Educational? Much more so:

It’s a few minutes later and, as expected, Echo now has a link clicked time and date. We happen to know that Echo will undoubtedly continue this survey in his typical, dedicated fashion, but we have to make sure not to push this example too far. Yes, it is theoretically possible that a participant with a “link clicked” date and no “participation marked” date/time indicates an “in progress” survey. In all probability, however, if you see a “link clicked” but no “participation marked” on the tooltip info box, it means the participant stopped the survey without completing it (assuming, of course, that you have correctly configured your online, external study to redirect participants back to your Sona site, as required for integration to be successful). Maybe they temporarily lost internet access, maybe their laptop died, or maybe a gathering down the hall became far too noisy to make concentration possible and they stopped to return to the survey at a more convenient time. The list could go on, but you get the idea.

Regardless of why the participant hasn’t completed the survey, finding a “link clicked” time and date indicates that the participant did start the survey, and that they were never redirected back to Sona to have their participation marked. This could mean that the survey wasn’t set up properly, but as we’re assuming here that it was, a “link clicked” date/time with no corresponding “Participation Marked” indicates that the survey wasn’t finished.

Now comes the part where “Awaiting Action” actually involves some action. Luckily, the Past and Online tab view on the Uncredited Timeslots page, combined with Tooltip information, is now our friend (rather than a cause for any worry about why the system isn’t handling credit granting/participation marking automatically). If a participant has a “link clicked” date but no “Participation Marked”, chances are the researcher will need to manually grant credit for participation. This is true even if the participant later finishes the survey.
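The reading of the tooltip described above can be summed up as a small decision rule. The sketch below is only an illustration: the `SignUp` record and the `triage` function are hypothetical names invented here, not part of Sona, and the three fields simply mirror the sign-up, “link clicked”, and “Participation Marked” times shown in the tooltip.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import Optional


@dataclass
class SignUp:
    # Hypothetical record mirroring the three tooltip timestamps (not a Sona data structure).
    signed_up: datetime
    link_clicked: Optional[datetime] = None
    participation_marked: Optional[datetime] = None


def triage(sign_up: SignUp) -> str:
    """Return a short label for what, if anything, the researcher may want to do."""
    if sign_up.participation_marked is not None:
        # Participation was marked, so nothing is left to handle.
        return "credited"
    if sign_up.link_clicked is None:
        # Signed up but never opened the survey link: the study has not been started.
        return "not started: no action needed"
    # Link clicked but participation never marked: the survey was probably left unfinished.
    return "started but not marked: review for manual credit"
```

Note that the last label says “review” rather than “grant”: whether credit is actually granted remains the researcher’s decision.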

Awaiting What Action? Qualifying the “need” to act

Before finishing up the only section in this post that actually involves taking action for those “Awaiting Action”, it’s worth clarifying what “will need” means in the above statement “the researcher will need to manually grant credit”. Here, the word “need” doesn’t mean that granting credit is mandatory, or is somehow necessary, or that if it is not done, then the result will be catastrophic system failure, total protonic reversal, and the end of all life as we know it. It simply means that if the researcher has decided to grant credit, then in this case they will need to do so manually. But their decision is just that: a decision. And it is contingent upon the relevant context or relevant information they have (such as the fact that the deadline has passed so the participant won’t be able to continue the survey at a later time, or the fact that they’ve matched the participant’s “link clicked” date and time with a nearly complete set of responses from one participant collected using their study’s online survey platform).

On the other hand, if the researcher notes that a participant has a “link clicked” date and time that doesn’t correspond to any timestamps on the responses they collected via their online survey platform, they may decide that a minimal level of participation wasn’t met. Alternatively, if the deadline hasn’t passed, then taking no action may be the optimal method, as the participant may simply wish to finish the survey later. In both of these cases, once again the participant “Awaiting Action” requires minimal actual action. Also, granting or not granting credit is often very context dependent and involves a great deal of discretion on the researcher’s part. That said, typically the decision not to grant credit involves one of two general considerations: Whether the participant a) failed to complete enough of the survey and for reasons having nothing to do with their ethical rights or b) will likely complete the survey at a later date.

If the researcher’s decision about whether to grant credit involves consideration a) above (i.e., failure to complete enough of the survey, and not because the participant exercised their ethical right to stop at any time), the “link clicked” date and time can be used for more than just determining whether to grant credit. After all, statistical inferences and study findings don’t depend upon whether or not participants receive credit; they depend upon data. The most common statistical paradigms make implicit assumptions about effect sizes or sampling distributions and the like that can depend upon drop-outs. Also, and perhaps more importantly, missing data is a more general problem to which there is no perfect solution from a statistical perspective, but it’s always helpful to have additional information about which set of responses (i.e., which participant’s responses), if any, are incomplete.

In short, researchers can use the “link clicked” dates and times for comparison with survey data collected to inform analysis and for handling their data using whatever statistical methods they wish (including, in the case of participants who start over, controlling for repeated IDs). But credit granting/participation marking in such cases should be handled by the researcher, a process that can be completed on the very page containing the tooltip information.
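For researchers who want to script this comparison, here is a minimal sketch of matching one “link clicked” time against response start times exported from a survey platform. Everything in it is an assumption made for illustration: the `match_response` function, the response IDs, and the five-minute tolerance are hypothetical, and the sketch presumes that both sets of timestamps are in the same time zone.

```python
from datetime import datetime, timedelta
from typing import Dict, Optional


def match_response(
    link_clicked: datetime,
    response_starts: Dict[str, datetime],
    tolerance: timedelta = timedelta(minutes=5),
) -> Optional[str]:
    """Return the ID of the response that started closest to link_clicked,
    or None if no response started within the tolerance."""
    best_id = None
    best_gap = tolerance
    for response_id, started in response_starts.items():
        gap = abs(started - link_clicked)
        if gap <= best_gap:
            # Keep the closest candidate seen so far.
            best_id, best_gap = response_id, gap
    return best_id


# Hypothetical example data: one "link clicked" time and two exported responses.
clicked = datetime(2023, 4, 17, 10, 0)
responses = {
    "R1": datetime(2023, 4, 17, 9, 0),
    "R2": datetime(2023, 4, 17, 10, 1),
}
print(match_response(clicked, responses))
```

A result of `None` suggests that no response began near the “link clicked” time, which is one of the situations in which a researcher might conclude that a minimal level of participation wasn’t met.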

Wrapping it Up

The good news is that “Awaiting Action” for online studies set up to mark participation automatically doesn’t require much actual “action.” Even if participant sign-ups don’t disappear once the participant actually starts the study (i.e., in cases where there is a “link clicked” date and time for a sign-up but no “Participation Marked”), researchers can easily determine when the participant started the survey and match the “link clicked” date and time with those from their survey responses if they want to make sure that the participant did indeed start the survey. But that’s more an issue of data analysis than participation marking and credit granting.

The “link clicked” date and time indicates that the participant started the survey, which is the equivalent of a participant showing up for a study at the correct time and place. So the only action needed is to simply mark participation and compensate accordingly, which can be done without leaving the page!